Test Environment

If possible, it is better to get yourself on a VMware course, but times are hard and companies are reluctant to provide training. I have set up a small test rig that lets me play around with many of the VMware features. Apart from Fault Tolerance (unless you want to spend lots of money), my rig will be able to handle the following

I have tried to keep my setup as cheap as possible. The only hardware restriction is that the machines must be 64-bit and must support virtualization. The other requirement is the SAN: in this case I have used an old PC as an iSCSI SAN running the openfiler software, which allows all the ESXi servers to access the shared storage.

Here is what I have purchased. I paid about £700 for the lot, which is still not cheap but is cheaper than a VMware course. What I am building is not supported by VMware, but it runs well and lets me learn ESXi and many of the features that come with it.

ESXi Servers |

I have purchased two HP DC 7800 desktop PC's with the following configuration

I will be naming my servers vmware1 and vmware2 |

iSCSI SAN (openfiler) |

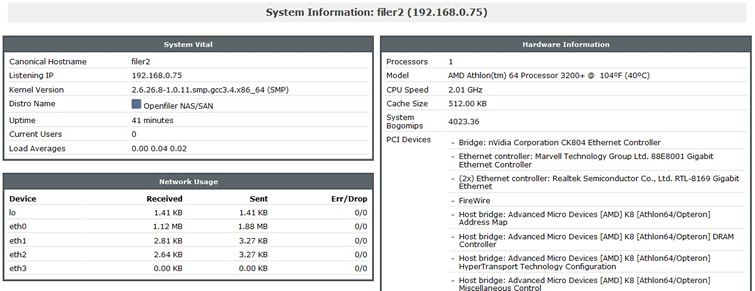

I have used an old PC that I built myself many years ago which has the following configuration, it's not powerful but actually runs very well.

I will be naming my openfiler server filer1 |

Networking |

I have two netgear GS608 8 port gigabit switch/router/hub. One will be used for the public network, the other will be used for both the iSCSI network and the private network. In a ideal world you would split the iSCSI traffic and private network on to two different switches but I have tried to keep the costs down |

KVM and Monitor |

I have used an old 14" LCD monitor and attached to a 4 port KVM switch and then connected this to both the ESXi servers and the openfiler server. |

We will be setting up a vCenter server. It will be created as a VM on one of the ESXi hosts, which is now VMware's preferred method, and I will be using a trial copy of Windows Server 2008 as the O/S. There are not many options when it comes to the vCenter O/S, so check the VMware documentation for what is supported.

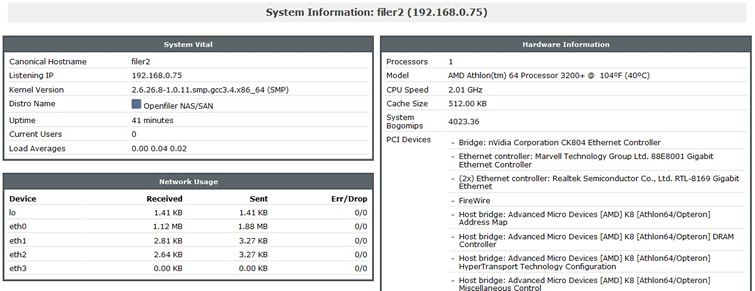

I have set up the following networking. I am not going to draw a diagram, as the network is too small: I only have two switches. You might have noticed that the openfiler server has two network connections on the iSCSI LAN. My plan is to use them to test I/O failover in VMware. It's a crude method, but it works well enough to show how VMware handles network failures.

| | Main PC | openfiler | vmware1 | vmware2 | Notes |
|---|---|---|---|---|---|
| Public LAN | 192.168.0.60 attached to port 1 | 192.168.0.75 attached to port 2 | 192.168.0.190 attached to port 3 | 192.168.0.191 attached to port 4 | For the public LAN I will use one of the Netgear GS608 switches |
| iSCSI LAN | n/a | 192.168.1.75 / 192.168.1.76 attached to ports 1 & 2 | 192.168.1.90 attached to port 3 | 192.168.1.91 attached to port 4 | For the iSCSI network I will use the first 4 ports on the second GS608 switch |
| Private LAN | n/a | n/a | 192.168.2.90 attached to port 7 | 192.168.2.91 attached to port 8 | For the private network I will use the last 2 ports on the second GS608 switch |
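An address plan typed by hand is easy to get wrong, so it can be worth checking it before configuring anything. The following is a minimal sketch, not part of my actual setup: it transcribes the public and iSCSI rows of the table and checks that every address sits in the right subnet and that no address is used twice. The /24 netmasks and the names `LANS`, `PLAN` and `check_plan` are my own assumptions for illustration.

```python
import ipaddress
from collections import Counter

# The three LANs from the table. The /24 masks are an assumption;
# the table only lists host addresses.
LANS = {
    "public": ipaddress.ip_network("192.168.0.0/24"),
    "iscsi": ipaddress.ip_network("192.168.1.0/24"),
    "private": ipaddress.ip_network("192.168.2.0/24"),
}

# (host, lan, address) rows for the public and iSCSI LANs.
# Private LAN hosts can be added in exactly the same way.
PLAN = [
    ("main-pc", "public", "192.168.0.60"),
    ("openfiler", "public", "192.168.0.75"),
    ("vmware1", "public", "192.168.0.190"),
    ("vmware2", "public", "192.168.0.191"),
    ("openfiler", "iscsi", "192.168.1.75"),
    ("openfiler", "iscsi", "192.168.1.76"),
    ("vmware1", "iscsi", "192.168.1.90"),
    ("vmware2", "iscsi", "192.168.1.91"),
]


def check_plan(plan, lans):
    """Return a list of problems found; an empty list means the plan is consistent."""
    problems = []
    # Every address must belong to the subnet of the LAN it is listed under.
    for host, lan, addr in plan:
        if ipaddress.ip_address(addr) not in lans[lan]:
            problems.append(f"{host}: {addr} is outside the {lan} LAN {lans[lan]}")
    # No address may be assigned more than once.
    counts = Counter(addr for _, _, addr in plan)
    for addr, n in counts.items():
        if n > 1:
            owners = [host for host, _, a in plan if a == addr]
            problems.append(f"{addr} is assigned {n} times: {', '.join(owners)}")
    return problems


if __name__ == "__main__":
    issues = check_plan(PLAN, LANS)
    for issue in issues:
        print(issue)
    if not issues:
        print("address plan looks consistent")
```

Adding a row with an address that is already taken, or one that falls in the wrong subnet, makes `check_plan` return a message describing the clash.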

The openfiler server has two 1TB disks that I will use for VMware storage. I am not going to explain how to create the volumes, but will point you in the direction of the openfiler web site instead.

| Storage type | Volume | Notes |
|---|---|---|
| Shared storage across both ESXi servers | filer1_vm1_ds1 | Both of these storage areas will be accessible to both ESXi servers, which will allow us to use vMotion. I have used two disks so that, if you decide to set up a cluster (for example a Veritas cluster), each ESXi server can keep its VMs on a different disk and so get better performance. |
| | filer1_vm2_ds1 | |
| NON-shared storage used to back up specific ESXi servers | filer1_vm1_bk1 | I will create two areas that will allow us to back up VMs; these areas will not be shared. |
| | filer1_vm2_bk1 | |

Here are some screenshots of my openfiler configuration. Hopefully they will give you an idea of what I have set up, but what you configure depends on your own requirements.

You don't need a very powerful system to run openfiler. As you can see, I have a very basic server and it is fine for testing purposes; it also works well with the ESXi server setup that I have.

The image below shows my network setup. As you can see, I have three network connections: one for the public network and two for the iSCSI network. I have also added the two ESXi servers to the access configuration, which will be used during the iSCSI setup. All ports have been configured at 1000Mb/s.

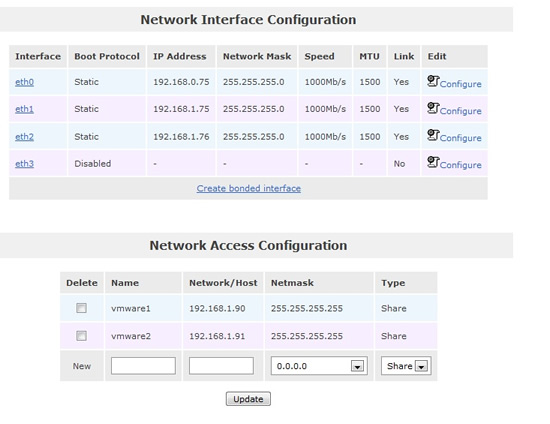

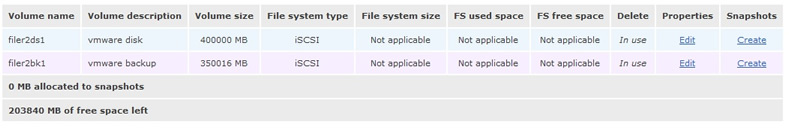

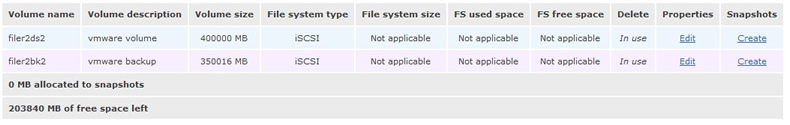

As I mentioned, I have two 1TB disks to play around with. I have created two volume groups, data01 and data02, and each volume group has one disk.

On disk one I have created two volumes: filer2ds1, which will hold VMs, and filer2bk1, which will be used for backing up VMs.

On disk two I have created two volumes: filer2ds2, which will hold VMs, and filer2bk2, which will be used for backing up VMs.

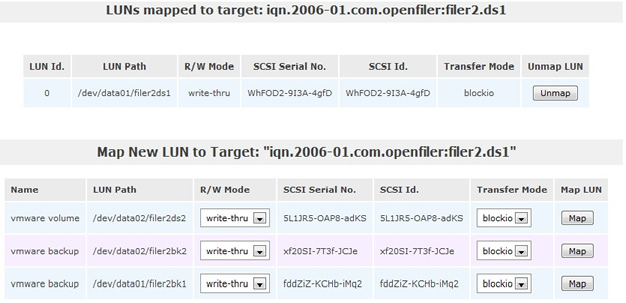

Once the volumes have been created you need to set up the iSCSI part. I have given my volumes target names like filer2.ds1 and filer2.ds2, but the naming convention is up to you. The image below shows one of the volumes mapped to its target: in this case volume filer2ds1 is mapped to target filer2.ds1.

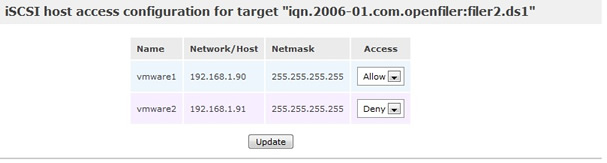
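Whatever convention you pick, a full iSCSI target name is normally an IQN of the form `iqn.<yyyy-mm>.<reversed domain>:<unique string>`. The sketch below assumes that short names like filer2.ds1 are used as the trailing unique string. The date `2010-01` and the domain `com.example` are placeholders of mine, not values from my openfiler box, and the regular expression is a simplified format check rather than a complete validator.

```python
import re

# Simplified IQN shape: iqn.<yyyy-mm>.<reversed domain>[:<unique string>]
IQN_RE = re.compile(
    r"^iqn\.\d{4}-(0[1-9]|1[0-2])"      # "iqn." followed by year and month
    r"\.[a-z0-9-]+(\.[a-z0-9-]+)*"      # reversed domain name, e.g. com.example
    r"(:[^\s,]+)?$"                     # optional unique string after a colon
)


def make_iqn(year_month, reversed_domain, suffix):
    """Build a lower-case IQN and reject anything that is not well formed."""
    iqn = f"iqn.{year_month}.{reversed_domain}:{suffix}".lower()
    if not IQN_RE.match(iqn):
        raise ValueError(f"not a well-formed IQN: {iqn}")
    return iqn


if __name__ == "__main__":
    # Placeholder date and domain; only the suffixes follow the article's naming.
    for suffix in ("filer2.ds1", "filer2.ds2"):
        print(make_iqn("2010-01", "com.example", suffix))
```

Keeping the volume name and the target suffix almost identical, as I have done, makes it much easier to see which target belongs to which volume later on.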

The last part is to allow access from the ESXi servers to your target. In this case I am allowing access from both ESXi servers to the filer2.ds1 target, which, if you remember, is the filer2ds1 volume.

That's pretty much it. openfiler is a very easy-to-use piece of software and I can highly recommend it for testing purposes (not for production).

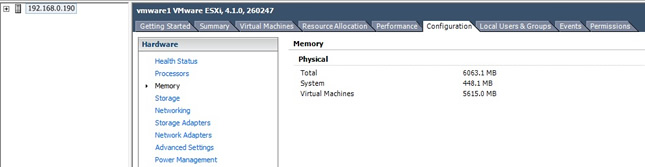
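Before moving on to the ESXi side, it can be handy to confirm that both iSCSI addresses on the filer are actually answering, and the same check is useful later when pulling a cable to test failover. This is a minimal sketch under a few assumptions: the target listens on the standard iSCSI port 3260, the machine running the script sits on the iSCSI LAN, and the function names are my own. It only tests that a TCP connection can be opened; it does not log in to the target or check the access list.

```python
import socket

ISCSI_PORT = 3260  # standard iSCSI target port


def portal_reachable(host, port=ISCSI_PORT, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False


def first_reachable(portals, port=ISCSI_PORT, timeout=2.0):
    """Return the first address in portals that accepts a connection, or None."""
    for host in portals:
        if portal_reachable(host, port, timeout):
            return host
    return None


if __name__ == "__main__":
    # The two openfiler iSCSI addresses from the network table.
    portals = ["192.168.1.75", "192.168.1.76"]
    for host in portals:
        state = "up" if portal_reachable(host) else "down"
        print(f"{host}:{ISCSI_PORT} is {state}")
    print("first usable portal:", first_reachable(portals))
```

With both cables connected, both addresses should report as up. With one cable pulled, `first_reachable` should fall through to the surviving address, which is roughly what the failover test is meant to demonstrate.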

I have set up both ESXi servers the same way, using the internal 120GB disk as the O/S disk. I will also be using the other internal 500GB disk as storage. Sometimes you may set up an ESXi server with internal storage only (no SAN), so this gives us the opportunity to see the differences between internal disks and SAN disks. Each server will have 6GB of RAM, which will allow me to run multiple VMs on one ESXi server.

I am not going to show you how to set up the ESXi server, as this has been done to death on the web; all I can say is that it is very easy. I will, however, explain in detail how to set up iSCSI on an ESXi server in my virtual storage section.

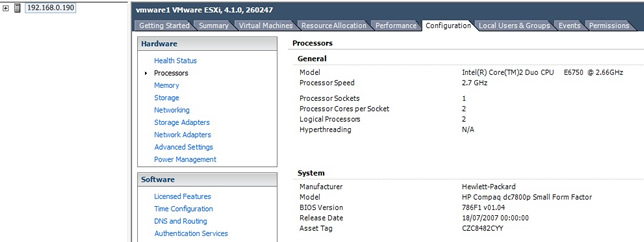

Here are some screenshots of my ESXi server setup. I will be going into greater detail on how to set up networking, storage, etc. later; for the time being, here is a quick summary of what I have. As you can see, the server is dual core with an E6750 processor, and the hardware is an HP DC 7800 desktop PC. It is not very powerful, but it is able to run a number of VMs simultaneously, which will be handy when we start testing DRS.

The more memory you have, the more VMs you can run simultaneously. I have 6GB in each ESXi server, which is enough for a test environment.

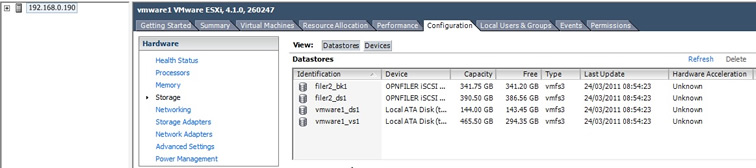
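As a rough guide to what 6GB buys you, the sum below works out how many VMs of a given size fit on one host if memory is not overcommitted. It is only a back-of-the-envelope sketch: the 1GB set aside for the hypervisor is a guess of mine rather than a measured figure, and `max_vms` is a hypothetical helper.

```python
def max_vms(host_ram_gb, vm_ram_gb, reserved_gb=1.0):
    """Estimate how many VMs of vm_ram_gb fit on a host without overcommitting.

    reserved_gb is memory kept back for the hypervisor itself; the default
    of 1GB is an assumption, not a measured value.
    """
    if vm_ram_gb <= 0:
        raise ValueError("vm_ram_gb must be positive")
    usable = host_ram_gb - reserved_gb
    return max(0, int(usable // vm_ram_gb))


if __name__ == "__main__":
    for vm_size in (0.5, 1, 2):
        print(f"{vm_size}GB VMs on a 6GB host: {max_vms(6, vm_size)}")
```

The estimate is deliberately conservative, since it treats every VM as using all of its configured memory all of the time.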

Don't worry too much about the storage at the moment, as I will be going into greater detail on how to set it up in my storage section. As you can see, I have the two iSCSI volumes (filer2_bk1 and filer2_ds1). I also have two internal disks in the HP DC 7800: vmware_ds1, which is where the O/S resides (I don't use this for any VMs), and vmware_vs1, which is a 500GB disk that I use for cluster VMs such as Sun Cluster, Veritas cluster, etc.

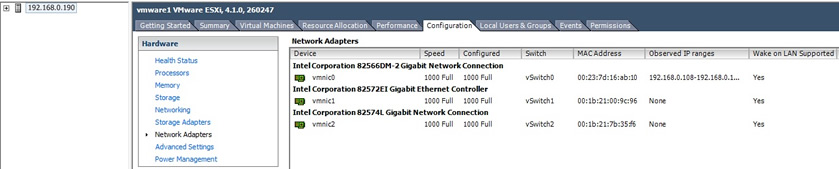

Lastly I have the NICs. I have three network interfaces: one for the public network, one for the iSCSI network and one for a private network, which will be used for vMotion, etc. Again, I will go into greater detail in my network section.

In future sections I will show you how to set up all of the above and other features of VMware.

In the end you should have two ESXi servers set up with access to a public, a private and an iSCSI LAN. Each server should have iSCSI storage (400GB and 350GB) and internal storage (500GB). You can change anything that I have mentioned above; just make sure that the servers or desktop PCs you buy for ESXi are 64-bit and support virtualization.